Assessing robustness of real-time deep fake technology

Executive Summary

Threat actors are using real-time deep fake technology to circumvent personnel security controls for criminal purposes.

Simple attempts to disrupt real-time deep fakes, such as asking suspected criminals to put their hand in front of their face, have proven effective in some cases.

TYBURN research has demonstrated that more robust tools able to defeat such simple tests are well within the reach of criminal actors.

We can help you secure your organisation against complex threats.

On 4 February 2025, a viral LinkedIn post drew attention to the use of real-time deep fake technology by threat actors seeking to circumvent personnel security controls. The post included a screen recording of a remote job interview in which the candidate was visibly using a deep fake tool to overlay another face onto their own.

Suspecting the use of deep fake technology, the interviewer asked the candidate to wave their hand in front of their face. The candidate was reluctant to do this, rightly suspecting that doing so would disrupt the real-time deep fake. The candidate’s refusal to comply with the request led the interviewer to terminate the call – a success for what might be called the ‘facepalm test’.

In previous articles, TYBURN examined the mechanics of these deep fake-enabled remote worker scams. This article, which is based on a longer research report, examines the robustness of real-time deep fake technology. Specifically, we examine whether the ‘facepalm test’ is really effective in disrupting and detecting deep fake technology.

Real-time deep fake technology

The proliferation of ‘.ai’ websites has made many free, browser-based face swap tools available that allow a user to overlay another face onto their own in real time. However, these tools are limited and generally do not produce a convincing output. In our testing, they visibly failed the facepalm test.

Current browser-based tools generally produce unconvincing deep fakes.

We experimented with DeepFaceLab, a popular open-source project hosted on GitHub, and DeepFaceLive, a Python-based real-time implementation of this tool. Running the tool successfully depends on meeting several requirements, the most important being a powerful Nvidia or DirectX12-compatible graphics card.

Generating a convincing real-time deep fake requires experimentation. There are many factors to consider that can have an impact on the result. Facial hair and skin tone were important considerations; a close match enabled a more convincing deception.

Generating a more effective model requires experimentation.

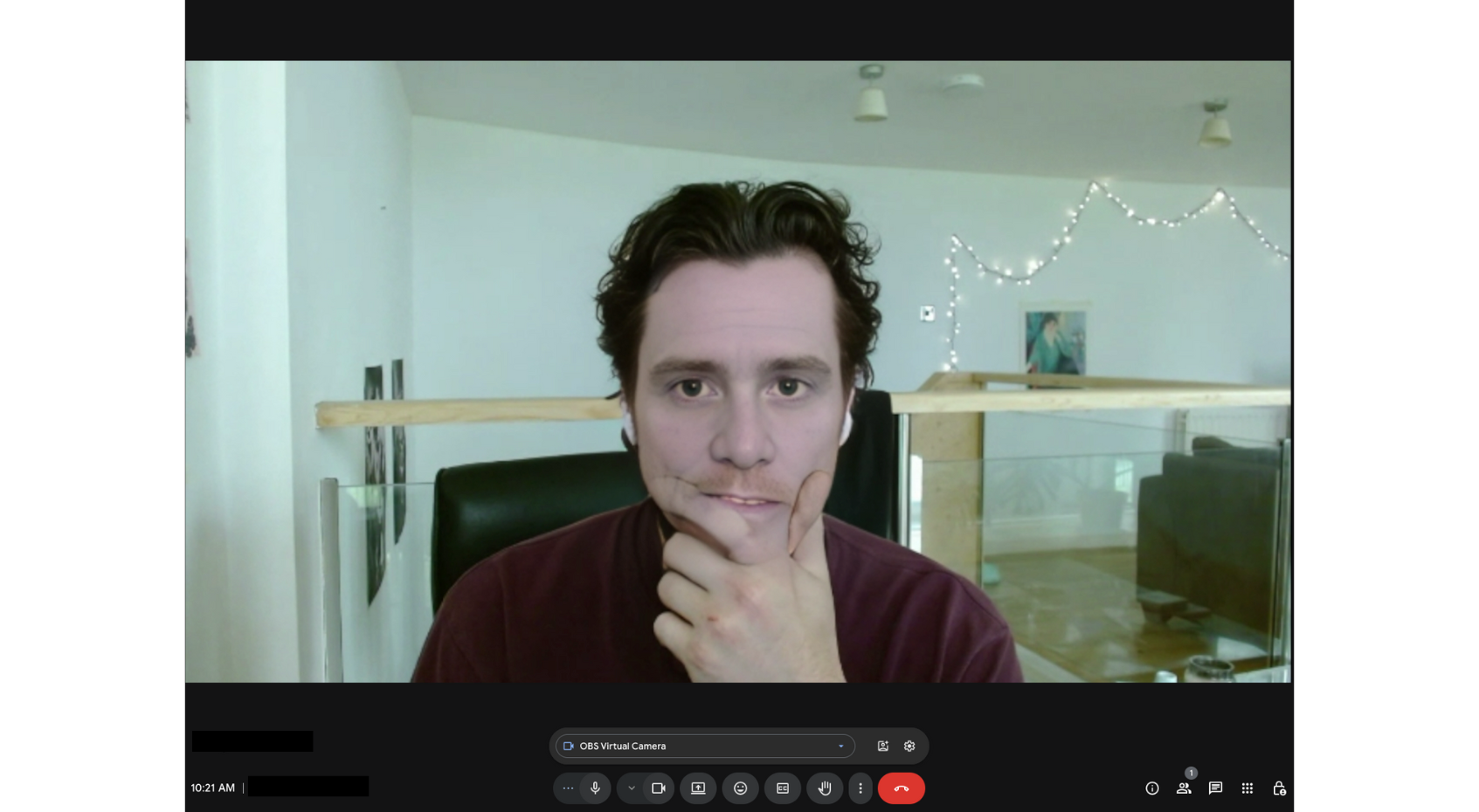

Testing demonstrated that the tool could convincingly accommodate the movement of a hand in front of the user’s face. Moving the hand at different speeds and adjusting the video processing delay increased the realism of the effect, leaving almost no distortion visible on video.

With less than an hour and access to readily available computing power, the facepalm test can be beaten.

Conclusion

Less sophisticated browser-based deep fake tools lack the realism and robustness to enable effective deception in video calls. They are more prone to obvious visual inconsistencies during basic facial movements or obstructions, and they lack the image quality expected in a video call. Although the facepalm test will disrupt these tools, it is likely to be redundant given the low level of realism they provide.

However, advances in open-source tooling mean that robust and realistic real-time deep fakes are readily within the capabilities of even moderately well-resourced threat actors. With access to readily available computing power, it is possible within an hour to develop a model that can withstand the facepalm test with minimal distortion. For organisations at risk of targeting by motivated threat actors, this research demonstrates a clear need for personnel and technical security controls that can defeat deep fake-enabled fraud.

Attempts to develop deep fake detection technology are likely to be caught up in an ongoing cycle of innovation between attackers and defenders. More important than technological solutions alone will be changes to organisations' recruitment processes, with HR and security functions coordinating early to develop vetting procedures that identify risks in candidates before they escalate.

The full version of the report detailing our research into real-time deep fake technology is available on request.

TYBURN RECOMMENDATIONS

Empower people as your first line of security

Drive awareness of deep fake-enabled criminality and create a culture in which colleagues feel encouraged to report suspicions about potential deception.

Build security into recruitment processes

Organisational recruitment processes should be reviewed to ensure that HR and security functions are coordinating to identify and mitigate emerging technological threats.

Engage security experts to develop solutions tailored to your environment

Mitigating the threat from more advanced attackers will require integrating technological and personnel security processes and controls.

At TYBURN, we specialise in countering evolving threats to risk-sensitive organisations. Our experts bring experience in government, military, and academia to bear in delivering solutions to challenging problems.